Published on 【Feng's Docs】


【Nvidia】Jetson AGX Orin / Jetson Orin Nano hardware testing and debugging (ongoing)

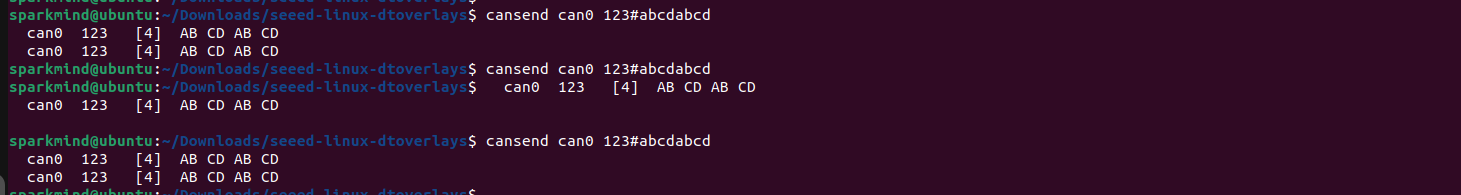

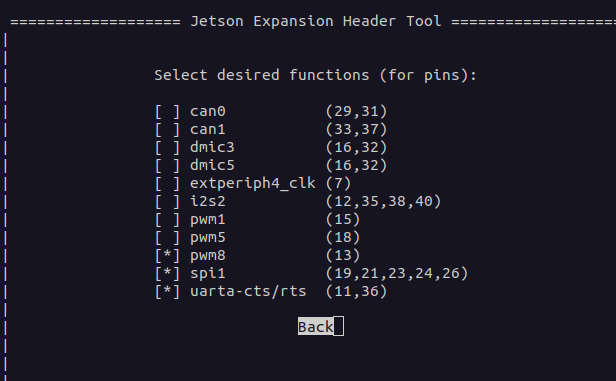

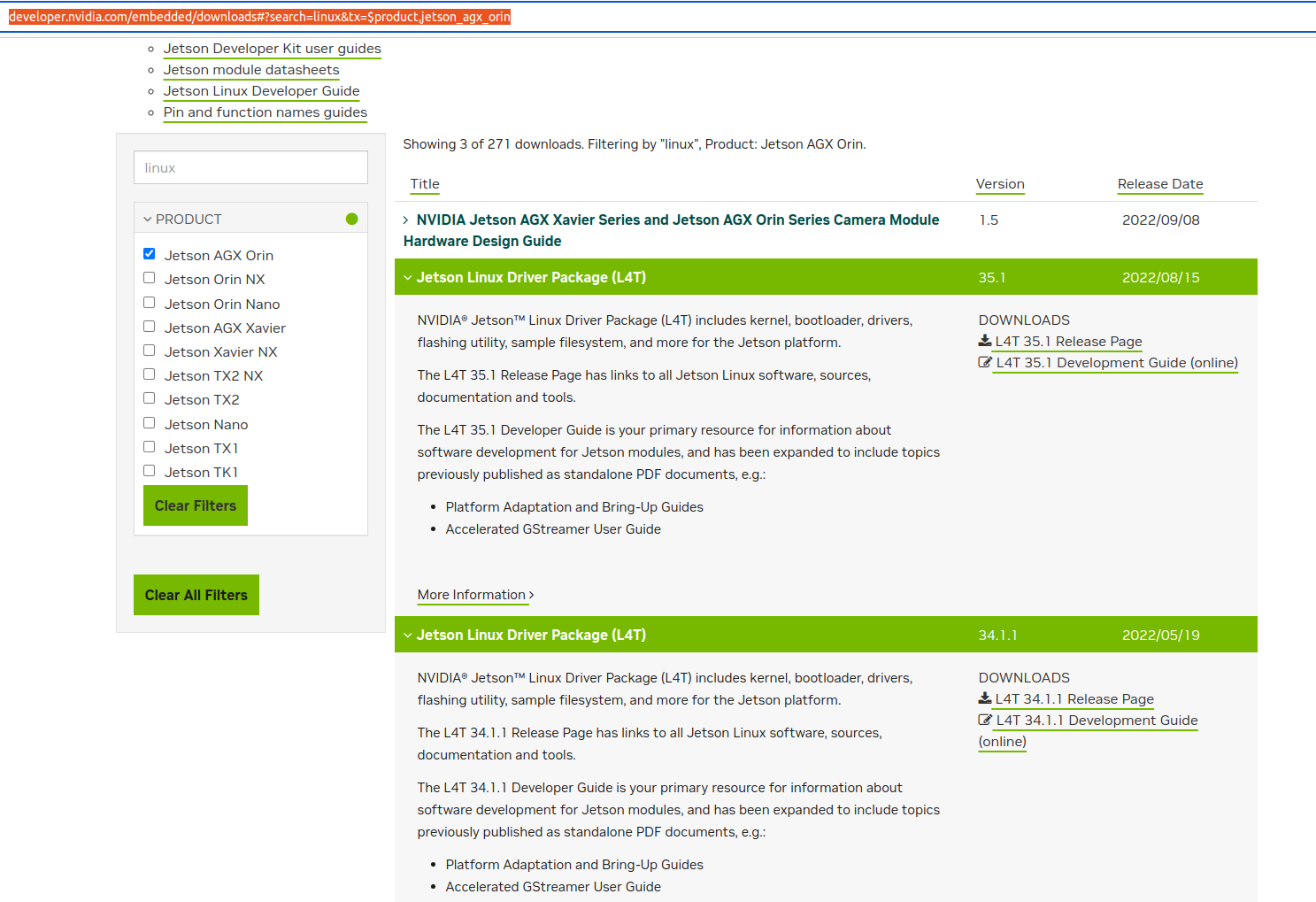

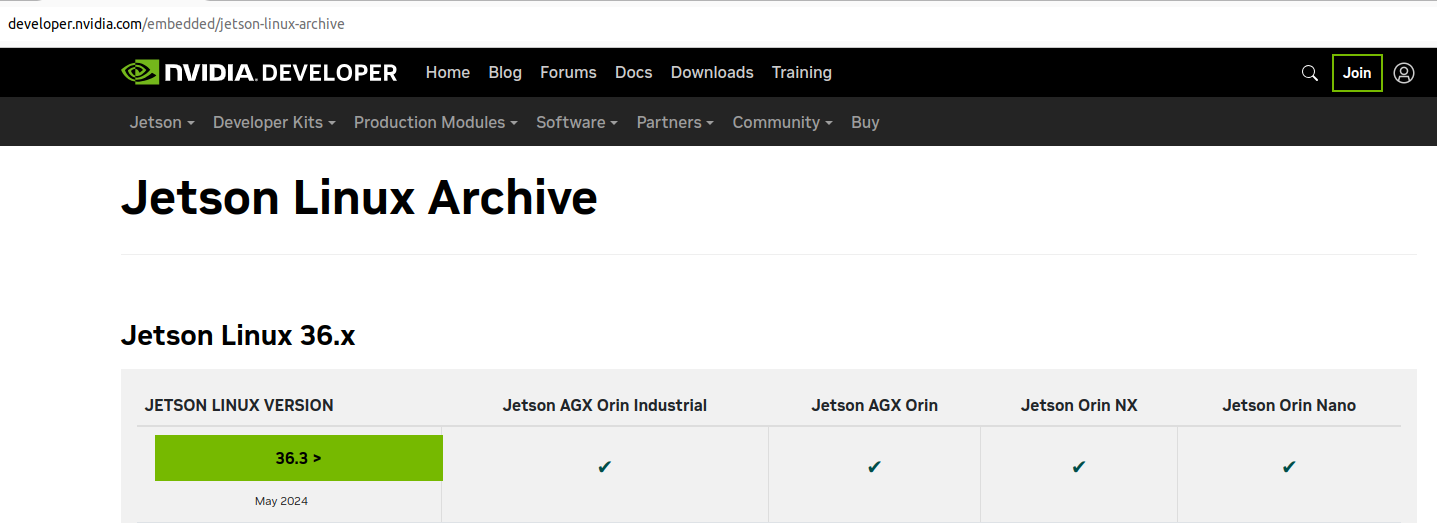

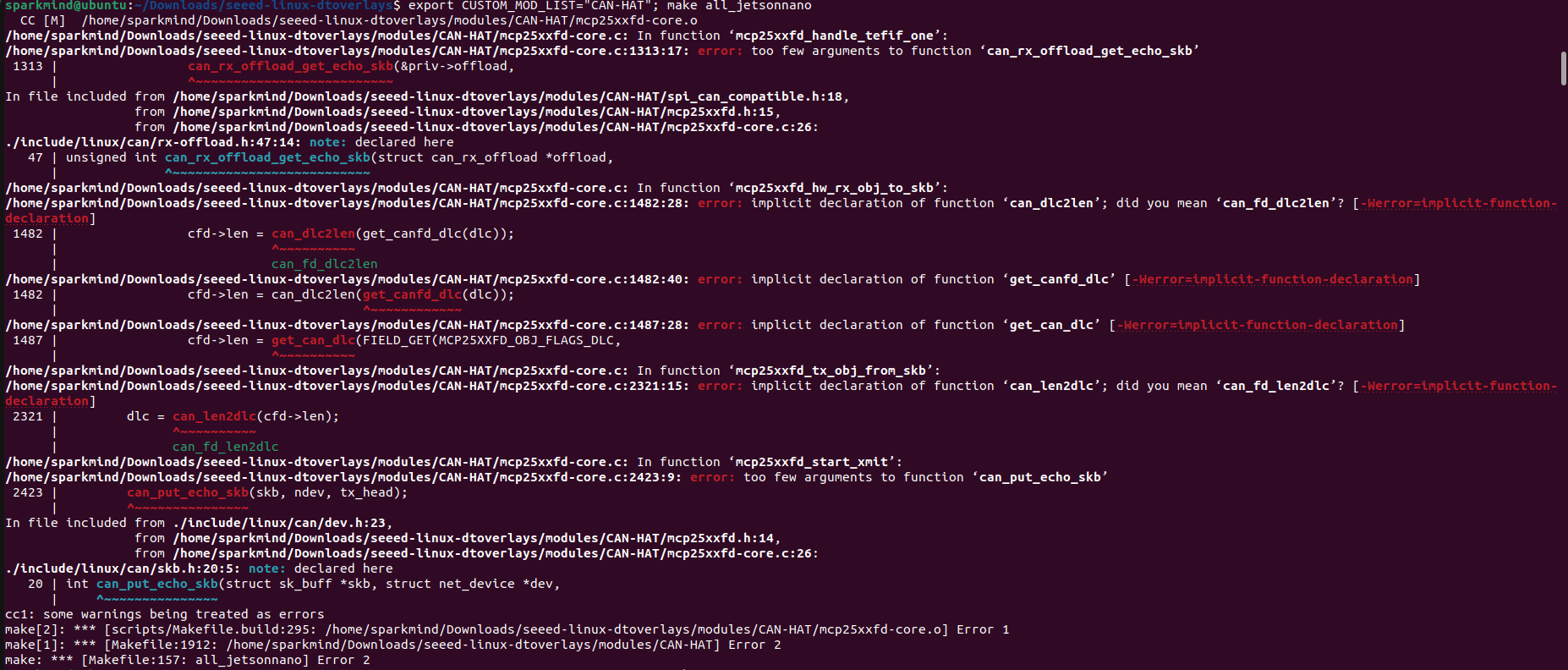

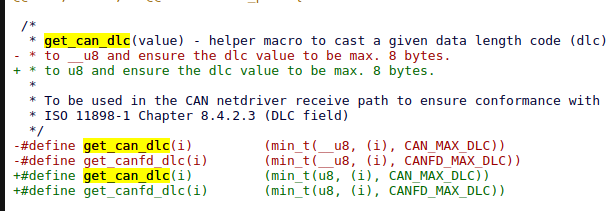

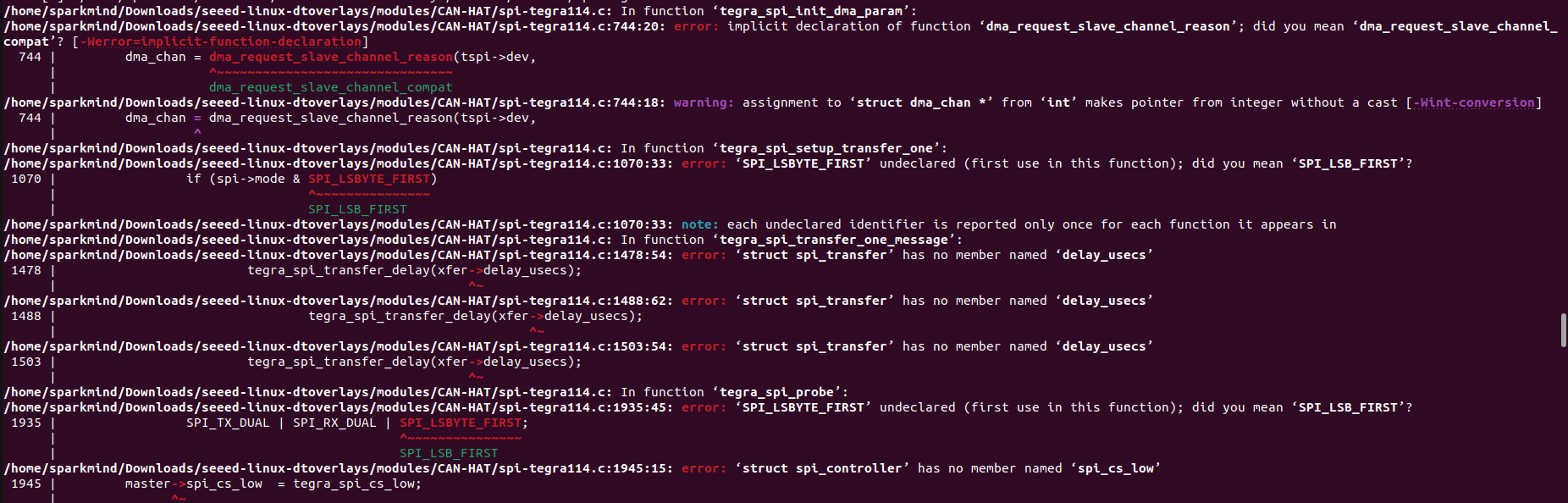

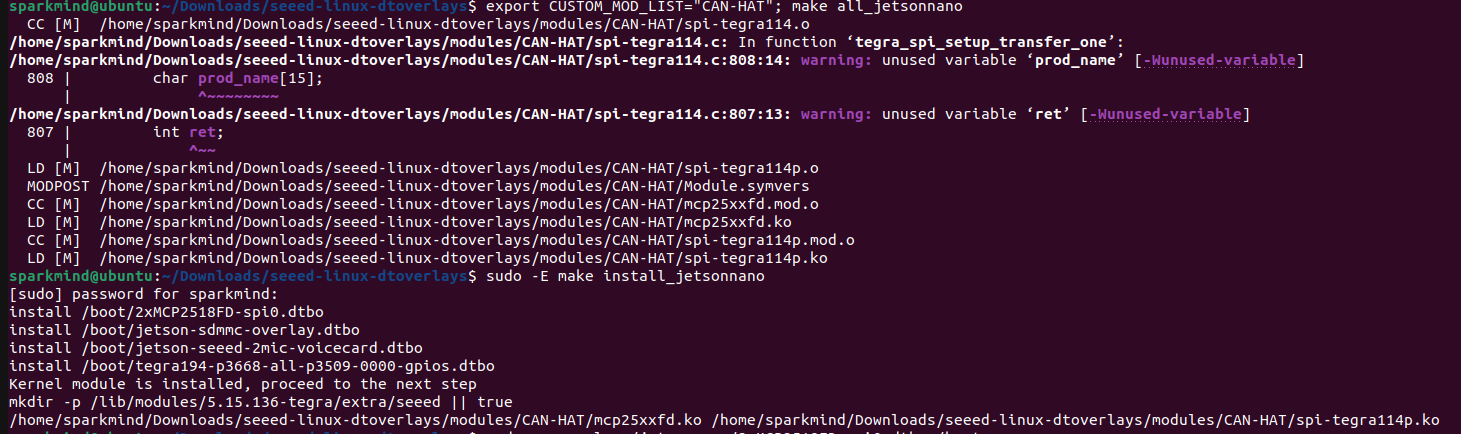

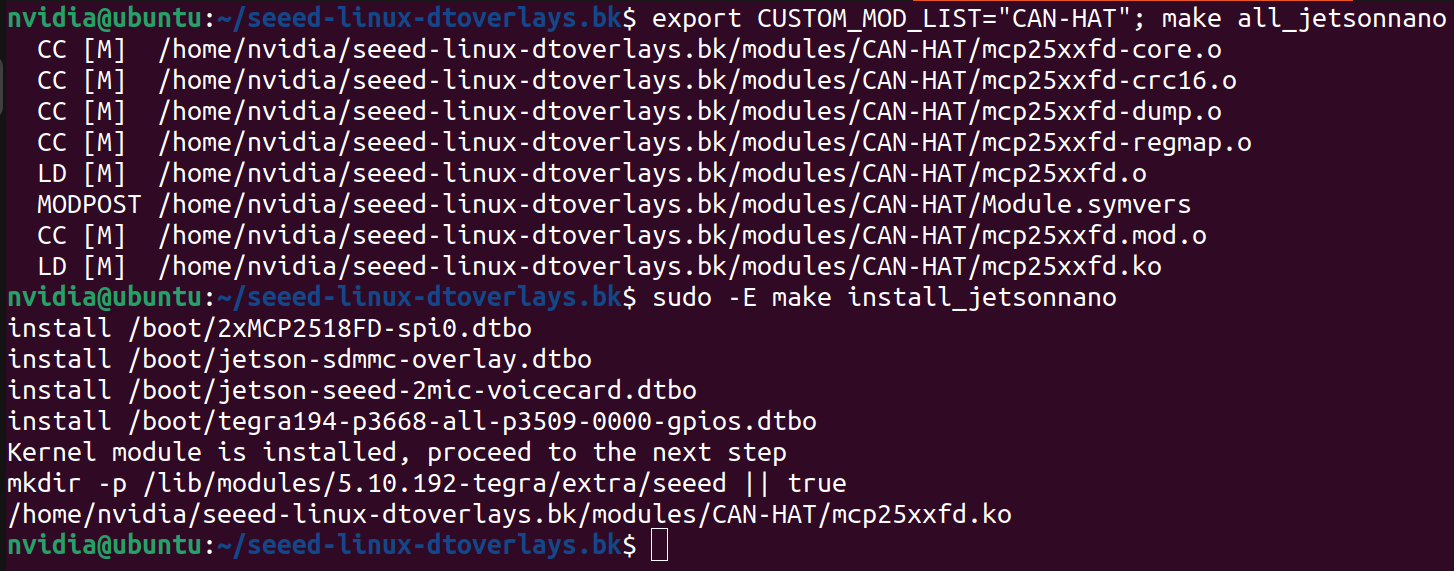

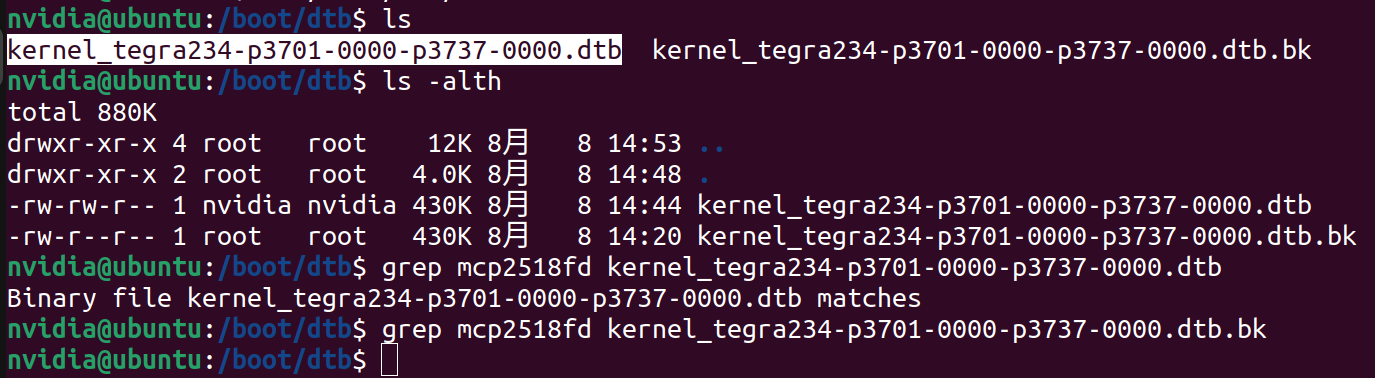

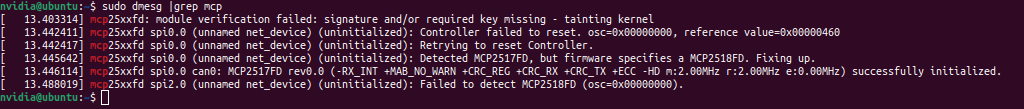

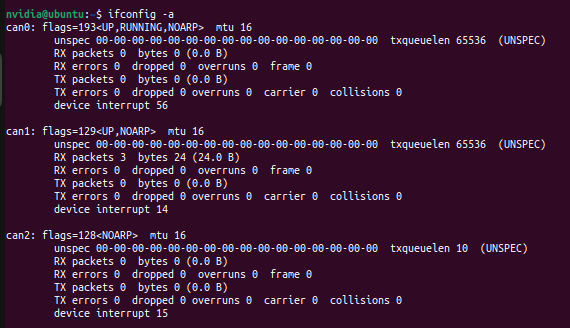

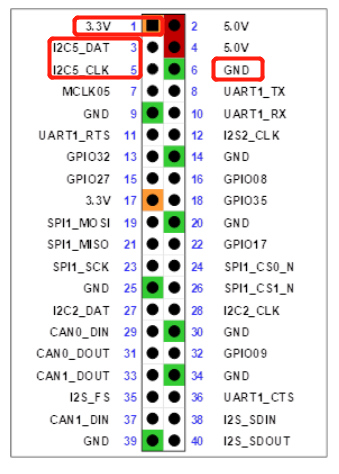

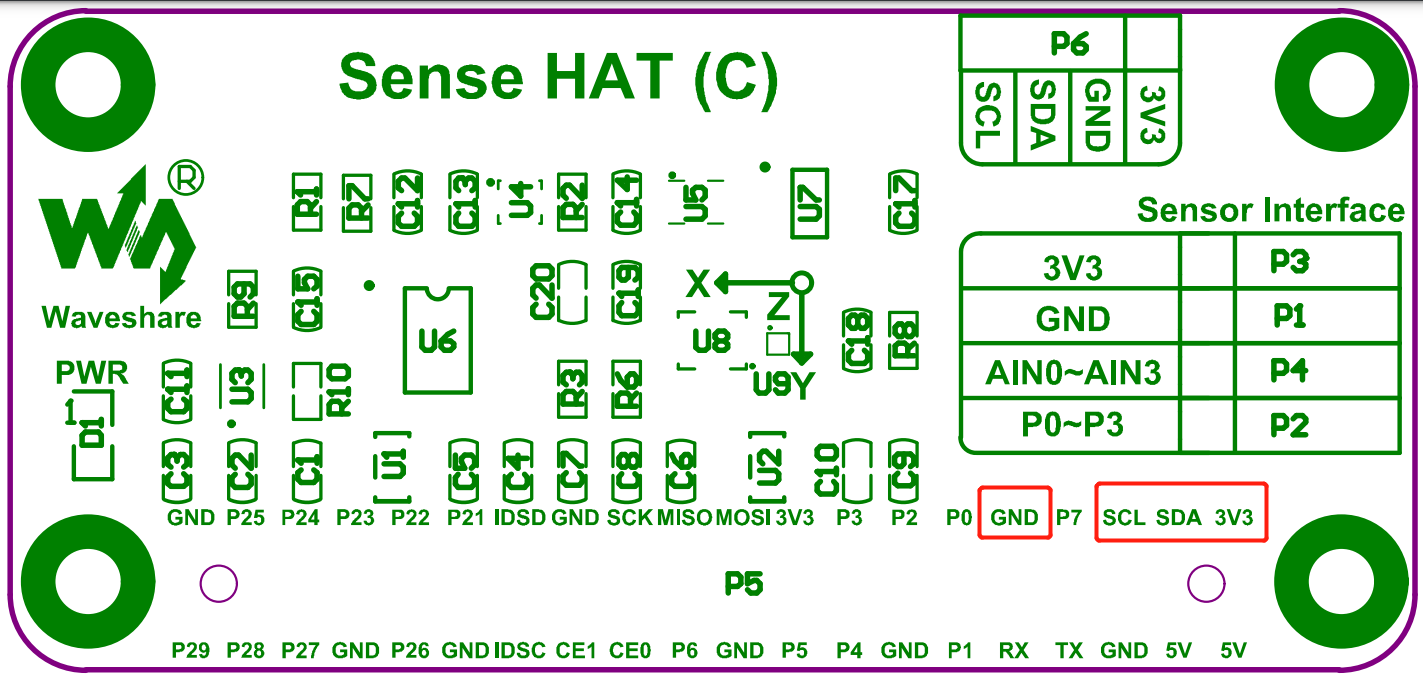

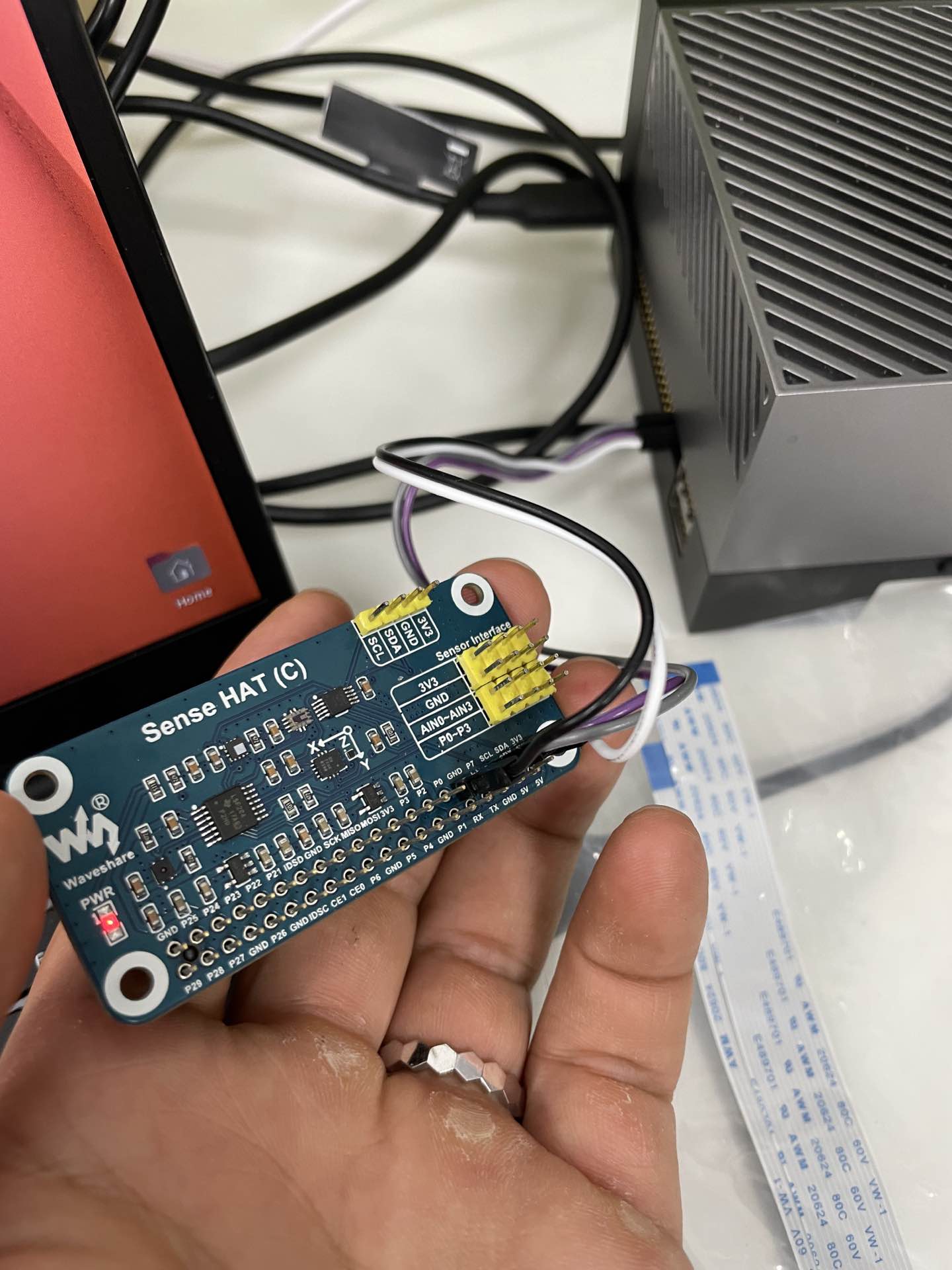

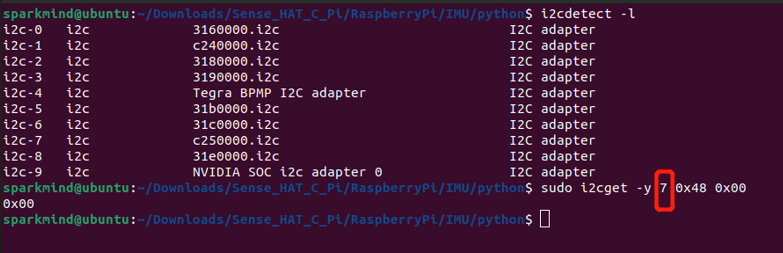

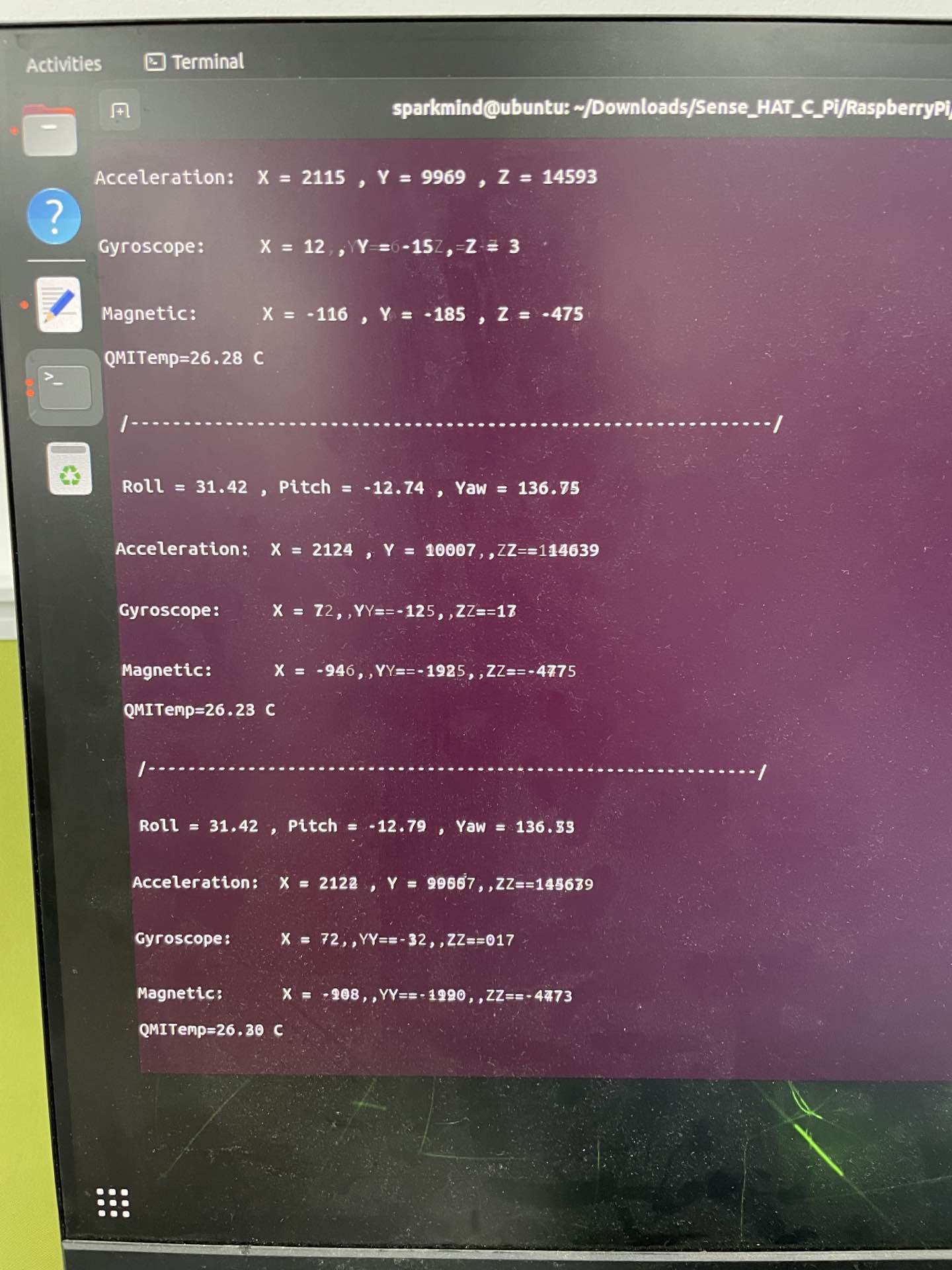

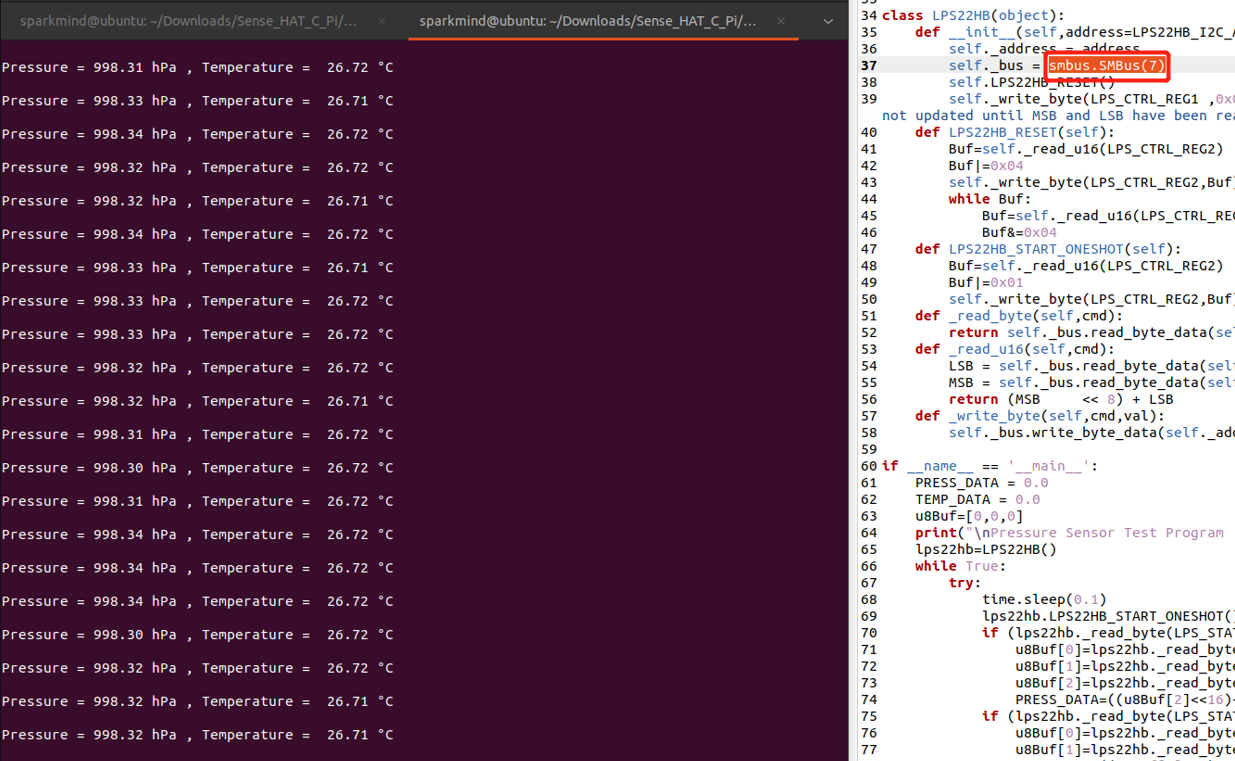

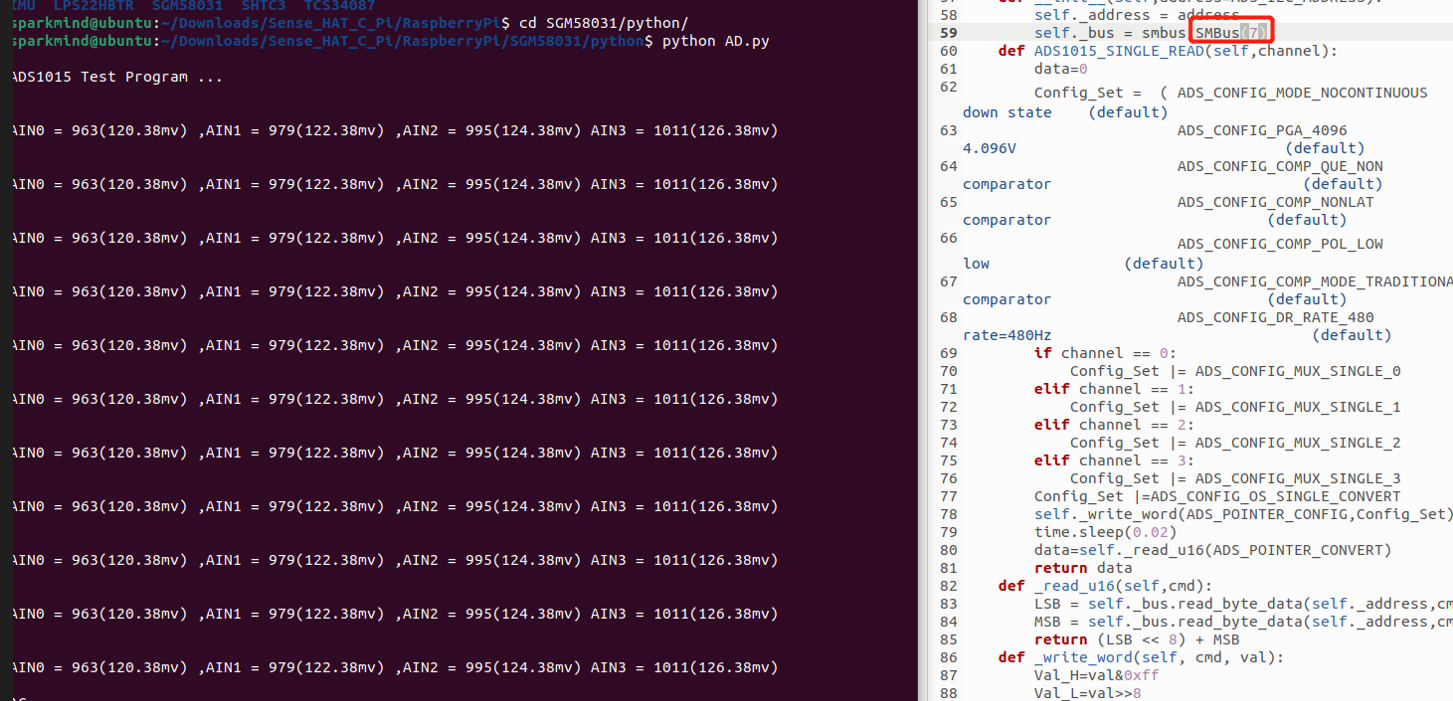

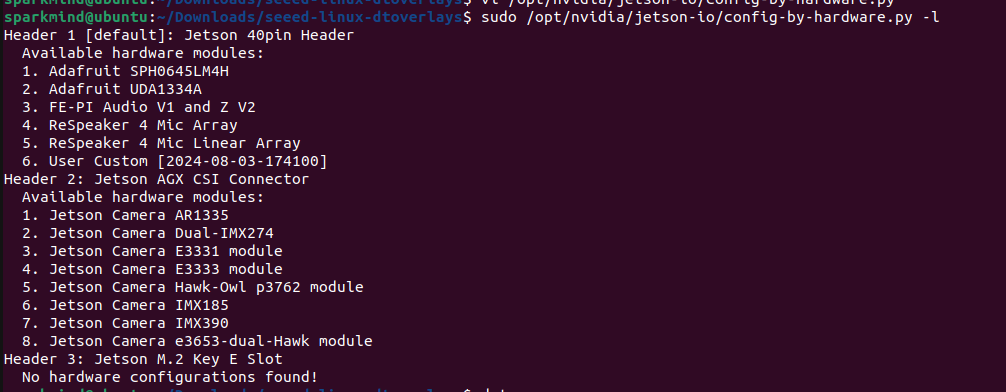

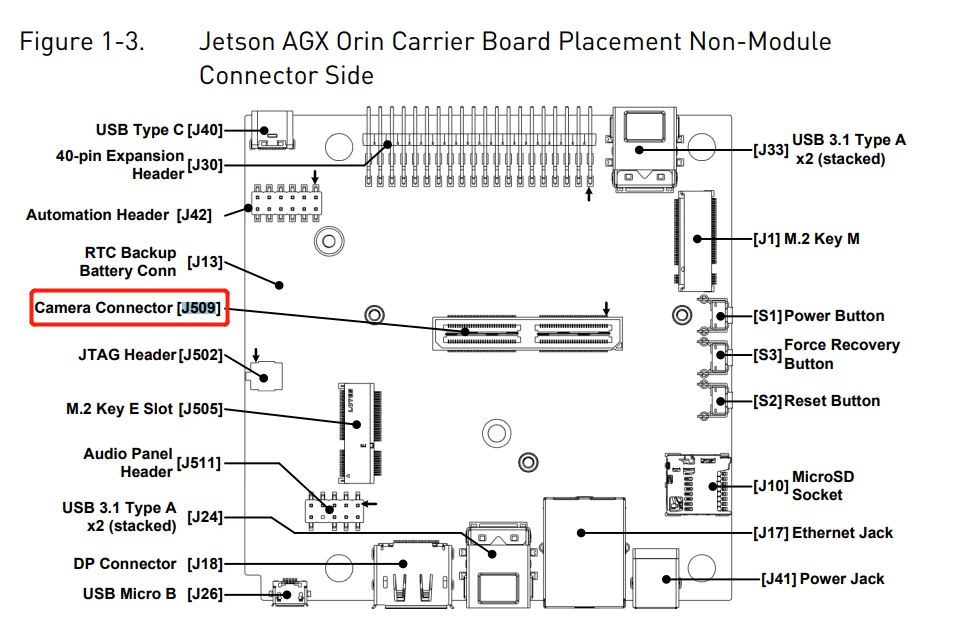

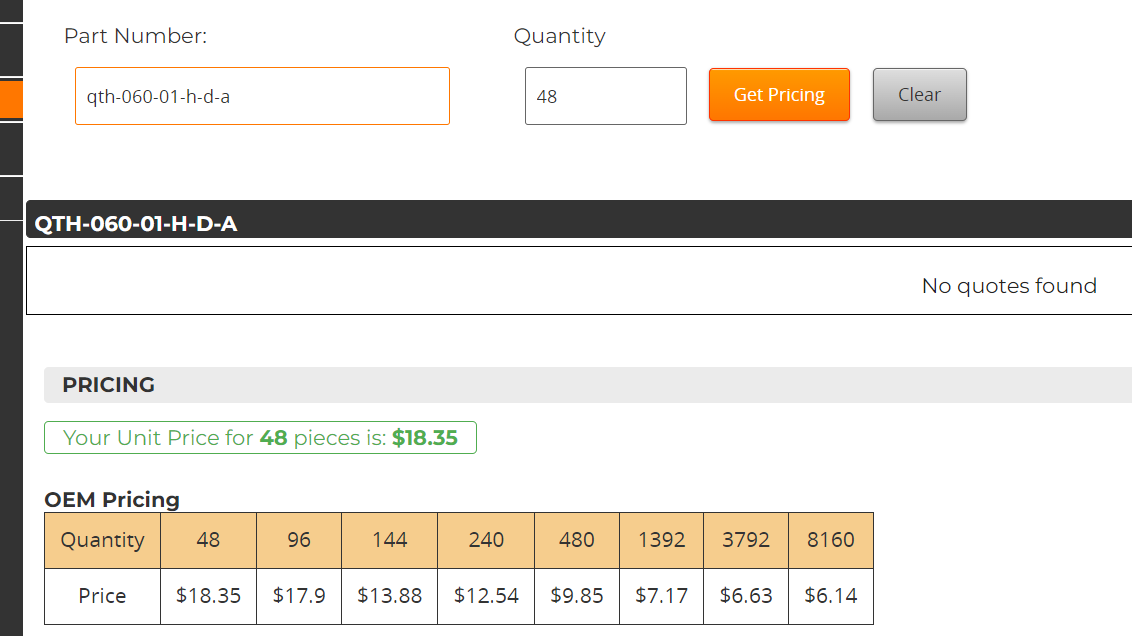

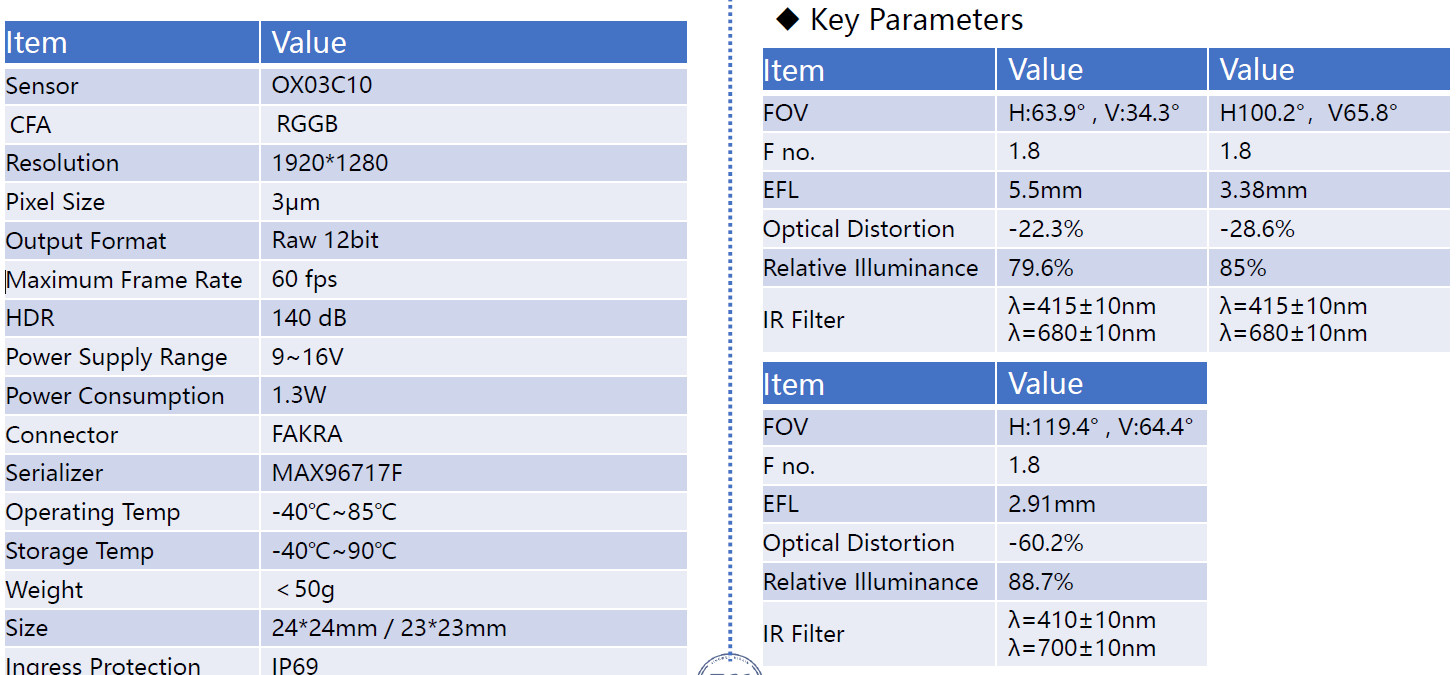

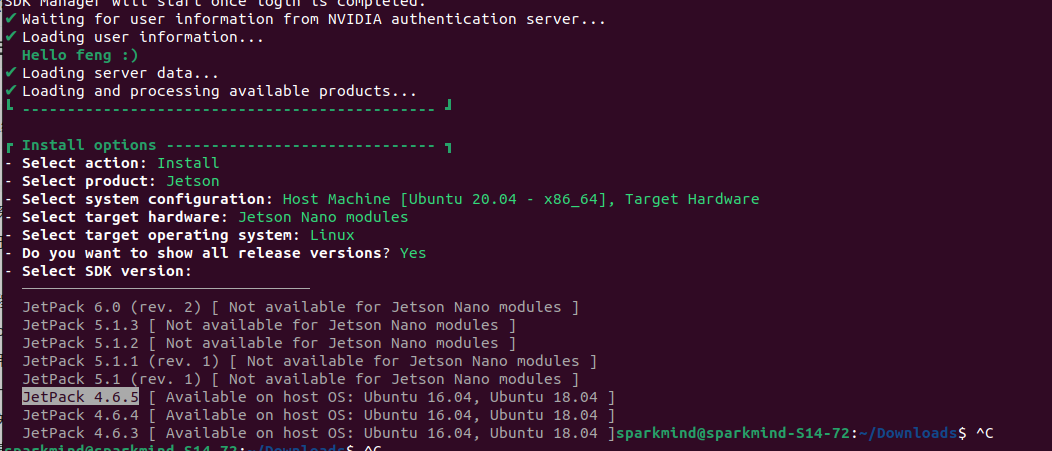

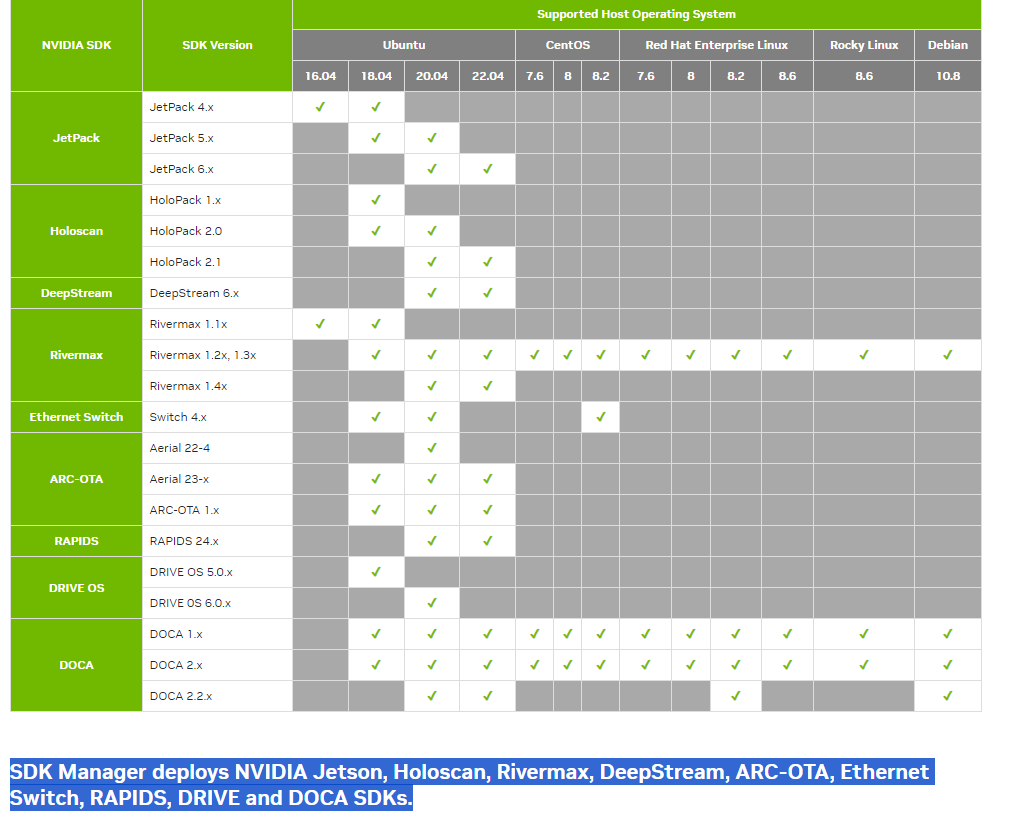

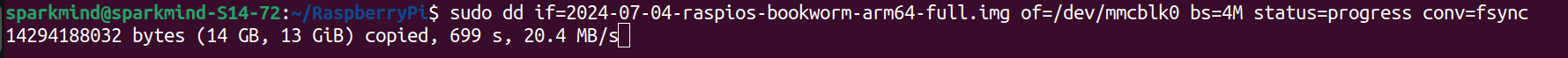

# 1. Action items

* [x] Re-flash the laptop (Ubuntu 20.04 -> Ubuntu 22.04; confirmed that the sdkmanager application and docker both work) (`see 2.0`)
* [x] Flash images
    * [ ] ~~DRIVE (requires dedicated DRIVE hardware)~~
    * [x] JetPack 6.0 (Jetson-specific) flashed; CUDA, AI, and the other modules were all flashed. Installing the container runtime from get.docker.com requires a proxy to get past the firewall.
    * [ ] ~~The other images are listed in Annexe 3.1; all of them require dedicated hardware~~
* [ ] Hardware debugging
    * [x] CAN: 2-CH CAN FD HAT [Description](https://www.waveshare.net/wiki/2-CH_CAN_FD_HAT) (`see 2.1`)
    * [x] Sense HAT (C): [Description HAT(C)](https://www.waveshare.com/wiki/Sense_HAT_(C)), [Schematic](https://www.waveshare.net/w/upload/a/af/Sense_HAT_%28C%29_Schematic.pdf) (`see 2.2`)
    * [ ] Camera `in progress` (`see 2.3`)
    * AGX Orin 32 GB: [user guide](https://developer.nvidia.com/embedded/learn/jetson-agx-orin-devkit-user-guide/index.html), [hardware layout](https://developer.nvidia.com/embedded/learn/jetson-agx-orin-devkit-user-guide/developer_kit_layout.html)
* [ ] Test the execution efficiency of BEVFormer and Sparse4D on Orin
* [ ] Add PREEMPT-RT to the image
* [ ] Test robot simulation on Orin: Omniverse + Isaac Sim + Orin
* [ ] ~~Explore which DRIVE functions Orin offers~~
* [ ] Explore Orin's hard real-time capability
* [ ] OTA tools

# 2 Key process notes

## 2.0 Re-flashing the laptop

* Installing Ubuntu 22.04 directly solves the problems below.
    * Wi-Fi driver: https://forum.manjaro.org/t/realtek-rtl8111-8168-8411-cant-see-wifi-connections-after-manjaro-installation/126376/18
    * Ethernet driver (8111/8168/8411): https://www.realtek.com/Download/List?cate_id=584
* If the network does not work on the PC after re-flashing, disabling IPv6 restores it:

```
sudo nano /etc/sysctl.conf
# add the following lines:
net.ipv6.conf.all.disable_ipv6 = 1
net.ipv6.conf.default.disable_ipv6 = 1
net.ipv6.conf.lo.disable_ipv6 = 1

sudo sysctl -p
sudo systemctl restart networking
```

## 2.1 Two-Channel Isolated CAN FD Expansion HAT

* `1. loopback test`
    * CAN (`ok`; in the picture, the 1st and 3rd results are good, and the 2nd was taken after removing the short between Pin29 and Pin31 of can0): https://docs.nvidia.com/jetson/archives/r35.4.1/DeveloperGuide/text/HR/ControllerAreaNetworkCan.html?#loopback-test
    * SPI (`ok, in 3.1`): https://forums.developer.nvidia.com/t/jetson-orin-spi-interface-with-mcp2518-spi-module/272853
* `2. links`
    * [https://forums.developer.nvidia.com/t/jetson-orin-spi-interface-with-mcp2518-spi-module/272853](https://forums.developer.nvidia.com/t/jetson-orin-spi-interface-with-mcp2518-spi-module/272853)
    * [https://wiki.seeedstudio.com/2-Channel-CAN-BUS-FD-Shield-for-Raspberry-Pi/#using-can-bus-shiled-with-jetson-nano](https://wiki.seeedstudio.com/2-Channel-CAN-BUS-FD-Shield-for-Raspberry-Pi/#using-can-bus-shiled-with-jetson-nano)
* `3. steps`
    * 3.1. Enable SPI to check whether the HAT works.

        ```
        sudo /opt/nvidia/jetson-io/jetson-io.py
        # Then follow the CLI to enable the SPI header, save the configuration, and exit.
        # Data can then be received from CAN.
        ```

    * 3.2. Use the HAT as a CAN interface. Once the driver is in place, `ifconfig -a` should list the CAN interface.
        * 3.2.1 (Optional; only needed when compiling on the host PC) Configure the environment on the host machine.

            ```
            # Install required packages
            sudo apt update
            sudo apt install build-essential gcc make git libncurses5-dev bc flex bison
            # Install additional tools for cross-compilation
            sudo apt install gcc-aarch64-linux-gnu
            # Install the Device Tree Compiler (DTC)
            sudo apt install device-tree-compiler
            ```

        * 3.2.2 Download the Jetson Linux source. We used version 36.3.
            * https://developer.nvidia.com/embedded/downloads#?search=linux&tx=$product,jetson_agx_orin
            * https://developer.nvidia.com/embedded/jetson-linux-archive
            * https://developer.nvidia.com/embedded/jetson-linux-r363
        * 3.2.3 Modify the code to adapt it to the new Linux kernel, then build the driver.
            * I checked the 40-pin header of the Jetson Nano. Its SPI pins are exactly the same, so this project can be used: https://wiki.seeedstudio.com/2-Channel-CAN-BUS-FD-Shield-for-Raspberry-Pi/#using-can-bus-shiled-with-jetson-nano. If you follow that link and compile on the devkit, you may hit the errors below.
            * The errors for `can_rx_offload_get_echo_skb` and `can_put_echo_skb` occur because `rx-offload.h` and `rx-offload.c` were updated in the Linux kernel. Replace those files and modify the calling code. (`All modifications are given in the Annexe`)
            * The errors for `get_canfd_dlc` and `get_can_dlc` can be solved by defining new macros.
            * For the other errors, follow the compiler suggestions shown in green. They are caused by changes in `can-utils/include/linux/can/lib.h`.
            * For the errors in `spi-tegra114.c`, replace the file with the newest version from the kernel source.
            * After these changes the build succeeds.
        * 3.2.4 Modify the device tree.
            * Forum reply: https://forums.developer.nvidia.com/t/jetson-agx-orin-spi-interface-with-2-ch-can-fd-hat-mcp2518fd-spi-module/302264
            * Solution: https://elinux.org/Jetson/L4T/peripheral/#MCP2515_Verification (the file for JetPack 6.0 is under the 36.2 link).
            * ==PS: Check carefully which model you are using. For example, I used a P3730 (P3701 as the Orin module and P3737 as the carrier board), so the dtb I used is something like *p3737-0000+p3701-0000.dtb*.==
* 4. Result:
    * JetPack 5.1.3 - 5.1.x
        1. The driver builds with no errors.
        2. The device tree dtb file appears to be changed.
        3. The driver is added.
        4. The interface appears.
    * JetPack 6.0 (Jetson Linux 36.3) - 6.x: follow `3. steps`, which are exactly the modifications made for JetPack 6.x.
* 5. ==Attention==: The Jetson AGX offers only one SPI option in the jetson-io list, so a single SPI channel has to be used to control both CAN channels.
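The `get_can_dlc` and `get_canfd_dlc` macros mentioned in step 3.2.3 only clamp a raw DLC (data length code) to the largest value the protocol allows. As a minimal sketch of what that clamping means, the Python below mirrors the two macros and adds a hypothetical helper, `canfd_dlc_to_len`, that maps a CAN FD DLC to its payload length using the standard CAN FD length table. The limits 8 and 15 correspond to `CAN_MAX_DLC` and `CANFD_MAX_DLC` in the Linux headers. The Python function names are illustrative only and are not part of the driver.

```python
# Illustrative model of the DLC clamping macros; not driver code.

CAN_MAX_DLC = 8      # classic CAN: valid DLC values are 0..8
CANFD_MAX_DLC = 15   # CAN FD: valid DLC values are 0..15

# CAN FD DLC -> payload length in bytes. DLC 0..8 map one-to-one,
# DLC 9..15 select the larger CAN FD payload sizes.
CANFD_DLC_TO_LEN = (0, 1, 2, 3, 4, 5, 6, 7, 8, 12, 16, 20, 24, 32, 48, 64)


def get_can_dlc(raw: int) -> int:
    """Clamp a raw DLC to the classic CAN maximum (mirrors min_t(u8, i, CAN_MAX_DLC))."""
    return min(raw & 0xFF, CAN_MAX_DLC)


def get_canfd_dlc(raw: int) -> int:
    """Clamp a raw DLC to the CAN FD maximum (mirrors min_t(u8, i, CANFD_MAX_DLC))."""
    return min(raw & 0xFF, CANFD_MAX_DLC)


def canfd_dlc_to_len(raw: int) -> int:
    """Hypothetical helper: payload length in bytes for a CAN FD DLC."""
    return CANFD_DLC_TO_LEN[get_canfd_dlc(raw)]


if __name__ == "__main__":
    for dlc in (3, 8, 9, 12, 15):
        print(f"DLC {dlc:2d} -> classic {get_can_dlc(dlc)} bytes, "
              f"CAN FD {canfd_dlc_to_len(dlc)} bytes")
```

The `& 0xFF` stands in for the `u8` cast that `min_t` performs in C, so out-of-range inputs behave like they would in the macro.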
## 2.2 Sense HAT (C) debugging

* Hardware connected; the indicator LED is on and the power supply is correct.
* Data debugging
    * Orin I2C5 corresponds to 7 (the I2C bus number); change the I2C channel in each sensor's Python test script accordingly.
    * IMU: working
    * LPS22HB pressure and temperature sensor: working
    * SGM58031: working

## 2.3 Camera debugging

* Available hardware
* Connectors / cameras
    * On the Orin (J509): Samtec QSH-060-01-H-D-A
    * Mating connector: Samtec QTH-060-01-H-D-A. The official site has a minimum order of 48 pieces (price shown in the image). I bought 2 on Taobao. `Confirmed: it cannot connect to an FPC directly; a carrier board compatible with the Jetson AGX Orin is required.`
    * [Complete connection solution: sony-imx327-ultra-low-light-camera](https://www.e-consystems.com/nvidia-cameras/jetson-agx-xavier-cameras/sony-imx327-ultra-low-light-camera.asp)
    * [IMX219](https://www.digikey.com/en/products/detail/seeed-technology-co.,-ltd/114992263/12396924?utm_adgroup=&utm_source=google&utm_medium=cpc&utm_campaign=PMax%20Shopping_Product_Low%20ROAS%20Categories&utm_term=&utm_content=&utm_id=go_cmp-20243063506_adg-_ad-__dev-c_ext-_prd-12396924_sig-Cj0KCQjwh7K1BhCZARIsAKOrVqEpIVCOvcSxv1rX5EXwRPPhG4f3wZsL6ohing94GYLM2lEHFhO1dvwaAh3dEALw_wcB&gad_source=1&gclid=Cj0KCQjwh7K1BhCZARIsAKOrVqEpIVCOvcSxv1rX5EXwRPPhG4f3wZsL6ohing94GYLM2lEHFhO1dvwaAh3dEALw_wcB)
    * The parameters of the camera currently on hand are shown in the image. Its serializer chip is the MAX96717F (datasheet: https://www.analog.com/media/en/technical-documentation/data-sheets/max96717f.pdf). Deserializers such as the MAX96716A/B/F, a quad-port MAX96712, or the MAX96722 can all be used with it.
    * https://www.sensing-world.com/en/pd.jsp?id=20&fromMid=1656&recommendFromPid=0
* Useful links
    * [connection](https://forums.developer.nvidia.com/t/how-can-i-connect-this-camera/140966)
    * [Hardware Design Guide](https://developer.download.nvidia.cn/assets/embedded/secure/jetson/xavier/docs/Jetson_AGX_Xavier_and_Orin_Series_Camera_Design_Guide_DG-09364-001_v1.5.pdf?myHpjLkYdPSrdnl-2A5gxWP3LyA362Vz-CJaygWRP5uAwu6V8d3-4jJ0nqLQeKrHsElwfnCAoihXique0RyYYp4OFO034MqrGynRxOuRSpR2xdchw978Y7kFmdp-JwU1Gu7l8oHJuHjBK9elkSyrCeWzqNJsaPmFEuGg-E1s0Ux4KmaenxziRqCOev7lzRXY0cG9L1kDkx-KzYPKA54nyG9hOT3RHPv3cfNQLqzKfzmk6vwL&t=eyJscyI6ImdzZW8iLCJsc2QiOiJodHRwczovL3d3dy5nb29nbGUuY29tLyJ9)
    * [https://github.com/NVIDIA-AI-IOT/argus_camera](https://github.com/NVIDIA-AI-IOT/argus_camera)
    * [https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_argus_camera](https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_argus_camera)
* Try:
    * Try on Jetson Nano. (The maximum JetPack version is 4.6.5. I checked the kernel code, and there are indeed many differences between 4.6.5 (L4T 32.7.5) and 5.1.2 (L4T 35.4.1).)
    * Try on Jetson TX2 NX. The situation is the same as for the Jetson Nano. It is a 4 GB memory version that can be used with the Nano developer kit, as this link says: https://forums.developer.nvidia.com/t/jetson-tx2-nx-on-jetson-nano-dev-board-b01/226011
    * Try on Jetson Orin Nano, which can use JetPack 5.1.2.

### 2.3.1 Steps to adapt the camera and GMSL board on RPi

* 1. Use a camera with the max9295a and get it working on Orin NX (JetPack 5.1.2). This is exactly the demo provided by the seller.
* 2. Modify the register section of the demo code so that the max9296a works with the max96717F, which allows cameras with the max96717F serializer chip.
* 3. Port the second step to the Raspberry Pi 4. The core parts are:
    * 3.1. Check how to modify the device tree on the Raspberry Pi 4.
    * 3.2. Check how to adapt the camera driver on the Raspberry Pi 4.
    * 3.3. Try to capture the stream, normally through the `v4l2 framework`.

## 2.4 AI capability testing

## 2.5 Real-time kernel

* https://docs.nvidia.com/jetson/archives/r35.1/DeveloperGuide/text/SD/Kernel/KernelCustomization.html

## 2.6 Robotics capability testing: Omniverse + Isaac Sim + AGX Orin

## 2.7 OTA

https://docs.nvidia.com/jetson/archives/r36.3/DeveloperGuide/SD/SoftwarePackagesAndTheUpdateMechanism.html#updating-jetson-linux-with-image-based-over-the-air-update

# 3 Annexe

## 3.1 Nvidia SDKManager

```
1. NVIDIA Jetson
NVIDIA Jetson is a family of embedded computing platforms developed by NVIDIA for AI and computer vision. The Jetson platform includes several developer kits and modules and supports development for AI, machine learning, deep learning, robotics, drones, and IoT devices. It is typically used where high compute performance is needed under power and space constraints.

2. Holoscan
NVIDIA Holoscan is a real-time data processing platform for medical imaging and edge AI. It can process large data streams from multiple sensors and devices, enabling medical professionals to analyze data and make decisions in real time. It is particularly suited to medical applications that need high-performance data processing, such as ultrasound imaging, endoscopy, and surgical navigation.

3. Rivermax
NVIDIA Rivermax is an efficient network streaming SDK designed for applications that need high bandwidth and low latency. It is mainly used in the professional audio/video broadcast industry and supports high-quality media transport over IP networks. Rivermax optimizes streaming performance on NVIDIA network adapters.

4. DeepStream
NVIDIA DeepStream is a streaming analytics SDK designed for video analytics applications. It provides tools to ingest video streams from cameras, sensors, or files and analyze them in real time. It is commonly used in smart cities, retail analytics, security monitoring, and industrial inspection, and supports efficient GPU-based video processing and AI inference.

5. ARC-OTA
NVIDIA ARC-OTA (Aerial RAN CoLab Over-the-Air, formerly Aerial Research Cloud) is a platform for advanced wireless (5G/6G) research and development, providing an over-the-air network testbed built on NVIDIA Aerial. Despite the name, it is not a tool for pushing OTA software updates to Jetson devices.

6. Ethernet Switch
NVIDIA Ethernet Switch refers to NVIDIA's Ethernet switch products, typically used in data centers and enterprise networks. These switches offer high throughput, low latency, and advanced networking features for modern applications and virtualized environments. They build on Mellanox technology and suit enterprises that need high-performance network infrastructure.

7. RAPIDS
NVIDIA RAPIDS is a collection of GPU-based data science and machine learning libraries. It accelerates data processing and analytics and uses the CUDA parallel computing framework to speed up model training and inference. RAPIDS offers APIs similar to Python libraries such as pandas and scikit-learn, and suits applications that must process large datasets and analyze them quickly.

8. DRIVE
NVIDIA DRIVE is NVIDIA's autonomous driving platform, providing hardware and software tools for developing self-driving vehicles. It integrates AI compute, sensor processing, simulation, and test tools, and supports autonomous driving development from L2+ to L5. NVIDIA DRIVE applies to in-vehicle infotainment, autonomous driving control, data processing, and other areas.

9. DOCA
NVIDIA DOCA (Data Center Infrastructure on a Chip Architecture) is a programming framework for accelerating data center applications. It provides a set of APIs and libraries that let developers build efficient networking, security, and storage solutions on NVIDIA DPUs (data processing units). It suits modern data center infrastructure that needs better performance and security.

These SDKs and platforms cover a wide range of applications, from embedded AI to autonomous driving to the data center.
```

## Annexe 3.2 mcp2518fd modification for build

```c
// mcp25xxfd.h
#define get_can_dlc(i)   (min_t(u8, (i), CAN_MAX_DLC))
#define get_canfd_dlc(i) (min_t(u8, (i), CANFD_MAX_DLC))
```

```c
// mcp25xxfd-core.c
static netdev_tx_t mcp25xxfd_start_xmit(struct sk_buff *skb,
                                        struct net_device *ndev)
{
    struct mcp25xxfd_priv *priv = netdev_priv(ndev);
    struct mcp25xxfd_tx_ring *tx_ring = priv->tx;
    struct mcp25xxfd_tx_obj *tx_obj;
    const canid_t can_id = ((struct canfd_frame *)skb->data)->can_id;
    u8 tx_head;
    int err;
    // Declare frame_len and the canfd_frame pointer at the beginning
    unsigned int frame_len;
    struct canfd_frame *cf;

    if (can_dropped_invalid_skb(ndev, skb))
        return NETDEV_TX_OK;

    mcp25xxfd_log(priv, can_id);

    if (tx_ring->head - tx_ring->tail >= tx_ring->obj_num) {
        netdev_dbg(priv->ndev,
                   "Stopping tx-queue (tx_head=0x%08x, tx_tail=0x%08x, len=%d).\n",
                   tx_ring->head, tx_ring->tail,
                   tx_ring->head - tx_ring->tail);

        mcp25xxfd_log_busy(priv, can_id);
        netif_stop_queue(ndev);

        return NETDEV_TX_BUSY;
    }

    tx_obj = mcp25xxfd_get_tx_obj_next(tx_ring);
    mcp25xxfd_tx_obj_from_skb(priv, tx_obj, skb, tx_ring->head);

    /* Stop queue if we occupy the complete TX FIFO */
    tx_head = mcp25xxfd_get_tx_head(tx_ring);
    tx_ring->head++;
    if (tx_ring->head - tx_ring->tail >= tx_ring->obj_num) {
        mcp25xxfd_log_stop(priv, can_id);
        netif_stop_queue(ndev);
    }

    // Initialize the canfd_frame pointer
    cf = (struct canfd_frame *)skb->data;

    // Determine the frame length passed to can_put_echo_skb()
    if (cf->can_id & CAN_RTR_FLAG) {
        // Remote Transmission Request frames carry no data
        frame_len = 0;
    } else if (cf->can_id & CAN_EFF_FLAG) {
        // Extended Frame Format (EFF): clamp to CAN_MAX_DLEN
        frame_len = (cf->len > CAN_MAX_DLEN) ? CAN_MAX_DLEN : cf->len;
    } else {
        // Standard Frame Format (SFF): same clamping as EFF
        frame_len = (cf->len > CAN_MAX_DLEN) ? CAN_MAX_DLEN : cf->len;
    }

    // Call can_put_echo_skb with the additional frame_len parameter
    can_put_echo_skb(skb, ndev, tx_head, frame_len);

    err = mcp25xxfd_tx_obj_write(priv, tx_obj);
    if (err)
        goto out_err;

    return NETDEV_TX_OK;

out_err:
    netdev_err(priv->ndev, "ERROR in %s: %d\n", __func__, err);
    mcp25xxfd_dump(priv);
    mcp25xxfd_log_dump(priv);

    return NETDEV_TX_OK;
}
```

```c
// mcp25xxfd-core.c
static int mcp25xxfd_handle_tefif_one(struct mcp25xxfd_priv *priv,
                                      const struct mcp25xxfd_hw_tef_obj *hw_tef_obj)
{
    struct mcp25xxfd_tx_ring *tx_ring = priv->tx;
    struct net_device_stats *stats = &priv->ndev->stats;
    u32 seq, seq_masked, tef_tail_masked;
    int err;
    unsigned int frame_len = 0; // Receives the frame length from the echo skb

    seq = FIELD_GET(MCP25XXFD_OBJ_FLAGS_SEQ_MCP2518FD_MASK,
                    hw_tef_obj->flags);

    /* Use the MCP2517FD mask on the MCP2518FD, too. We only
     * compare 7 bits, this should be enough to detect
     * not-yet-completed, i.e. old TEF objects.
     */
    seq_masked = seq &
        FIELD_GET(MCP25XXFD_OBJ_FLAGS_SEQ_MCP2517FD_MASK, -1UL);
    tef_tail_masked = priv->tef.tail &
        FIELD_GET(MCP25XXFD_OBJ_FLAGS_SEQ_MCP2517FD_MASK, -1UL);
    if (seq_masked != tef_tail_masked)
        return mcp25xxfd_handle_tefif_recover(priv, seq);

    mcp25xxfd_log(priv, hw_tef_obj->id);

    // Call can_rx_offload_get_echo_skb with the additional frame_len_ptr argument
    stats->tx_bytes +=
        can_rx_offload_get_echo_skb(&priv->offload,
                                    mcp25xxfd_get_tef_tail(priv),
                                    hw_tef_obj->ts,
                                    &frame_len); // Pass the address of frame_len
    stats->tx_packets++;

    /* finally increment the TEF pointer */
    err = regmap_update_bits(priv->map_reg, MCP25XXFD_REG_TEFCON,
                             GENMASK(15, 8),
                             MCP25XXFD_REG_TEFCON_UINC);
    if (err)
        return err;

    priv->tef.tail++;
    tx_ring->tail++;

    return mcp25xxfd_check_tef_tail(priv);
}
```

## Annexe 3.3 Build a customized Nvidia kernel

* Build the kernel: https://docs.nvidia.com/jetson/archives/r34.1/DeveloperGuide/text/SD/Kernel/KernelCustomization.html

  `good practice`: https://forums.developer.nvidia.com/t/kernel-make-execution-creates-hardware-folder-on-my-desktop/262213/27
* Modify the kernel configuration: https://docs.nvidia.com/jetson/archives/r34.1/DeveloperGuide/text/AT/JetsonLinuxDevelopmentTools/DebuggingTheKernel.html?highlight=defconfig

## Annexe 3.4 If sdkmanager for docker cannot connect to the target device

link: https://forums.developer.nvidia.com/t/docker-sdk-manger-error/246313/7

## Annexe 3.5 Flashing errors caused by insufficient power

If you see the errors below when flashing the device, the cause may be `Power`. Check whether your power adapter supplies enough power. (An interesting error.)

* skip generating mem_bct because sdram_config is not defined
* failed to read rcm_state

link: https://forums.developer.nvidia.com/t/docker-sdk-manger-error/246313

## Annexe 3.6 Toolchain of Nvidia

`Check carefully which versions of JetPack, Linux, toolchain, and sdkmanager correspond to each other`; errors can occur if the versions do not match.

* Error encountered when flashing to an SD card: https://forums.developer.nvidia.com/t/jetson-orin-nano-devkit-disk-encryption-could-not-stat-device-dev-mmcblk0-no-such-file-or-directory/268976
* Check which storage device you are using: NVMe (M.2 storage), SD card, or eMMC.

## Annexe 3.7 About CSI Type

https://www.arducam.com/raspberry-pi-camera-pinout/

## Annexe 3.8 RaspberryPi Utils

* Build kernel & dts: https://www.raspberrypi.com/documentation/computers/linux_kernel.html#download-kernel-source
* Download image: https://www.raspberrypi.com/software/operating-systems/
* Camera config: https://www.raspberrypi.com/documentation/computers/camera_software.html#mode
* Camera software: https://www.raspberrypi.com/documentation/computers/camera_software.html#tuning-files
* Use the `unxz` command to convert the xz file to an img file, then flash the image to the SD card: https://www.raspberrypi.com/documentation/computers/getting-started.html
* If the image flasher keeps failing, try flashing the image to the SD card directly with the `dd` command.

## Annexe 3.9 Develop camera sensor driver

https://developer.ridgerun.com/wiki/index.php/Camera_Sensor_Basics
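Annexe 3.8 mentions converting the downloaded `.xz` archive into an `.img` file with `unxz` before flashing. On a machine where `unxz` is not installed, the same step can be sketched with Python's standard-library `lzma` module. This is a minimal sketch under stated assumptions: the function name `decompress_xz` and the file name in the usage line are hypothetical, and unlike `unxz` this version keeps the original archive.

```python
import lzma
import shutil
from pathlib import Path


def decompress_xz(src, dst=None, chunk_size=1024 * 1024):
    """Decompress an .xz file to dst (default: src without the .xz suffix).

    The data is streamed in chunks so a multi-gigabyte image does not
    have to fit in memory. The source archive is left in place.
    """
    src = Path(src)
    if dst is None:
        if src.suffix != ".xz":
            raise ValueError(f"expected a .xz file, got {src.name}")
        dst = src.with_suffix("")  # "image.img.xz" -> "image.img"
    dst = Path(dst)
    with lzma.open(src, "rb") as fin, open(dst, "wb") as fout:
        shutil.copyfileobj(fin, fout, chunk_size)
    return dst


if __name__ == "__main__":
    import sys

    # Usage (file name is a placeholder): python decompress_xz.py raspios.img.xz
    if len(sys.argv) == 2:
        print(decompress_xz(sys.argv[1]))
```

The resulting `.img` file can then be written to the SD card with the image flasher or with `dd`, as described in Annexe 3.8.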

dingfeng

August 19, 2024, 11:23
